Robot Factory

Research project

Abstract

This project explores the intersection of robotics, generative art, and perception. What began as a casual experiment developed into a broader investigation: how simple robotic behavior can create visual structures, how these structures interact with human perception, and how such experiments connect to the history of artificial intelligence and Gestalt psychology. The research combines hands-on prototyping with historical reflection, rediscovering old ideas in a playful way while turning my workshop into a factory where cute tiny robots diligently create art.

Red and blue marker pen on plotter paper

60 × 80 cm

The Beginning

In October 2023, I was visiting my friend Felix Fisgus in Bremen. One evening in his studio, over a couple of beers, we decided to build small drawing robots. Both of us had played with simple vibrating drawbots before, but this time we wanted them to behave a bit more deliberately. We used microcontrollers and gearmotors so the machines could follow pre-programmed motion patterns. The resulting drawings were surprisingly interesting.

Since we didn't have any large sheets of paper, we let the robots work on a whiteboard. It didn't take long for the idea of a whiteboard-cleaning robot to emerge. This one followed its own programmed path, wiping away some of the lines. When all the machines ran on the same board at the same time, it looked like a mix of generative art and a mini robot battle. Watching the little creatures do their thing while creating some sort of structured chaos was a lot of fun.

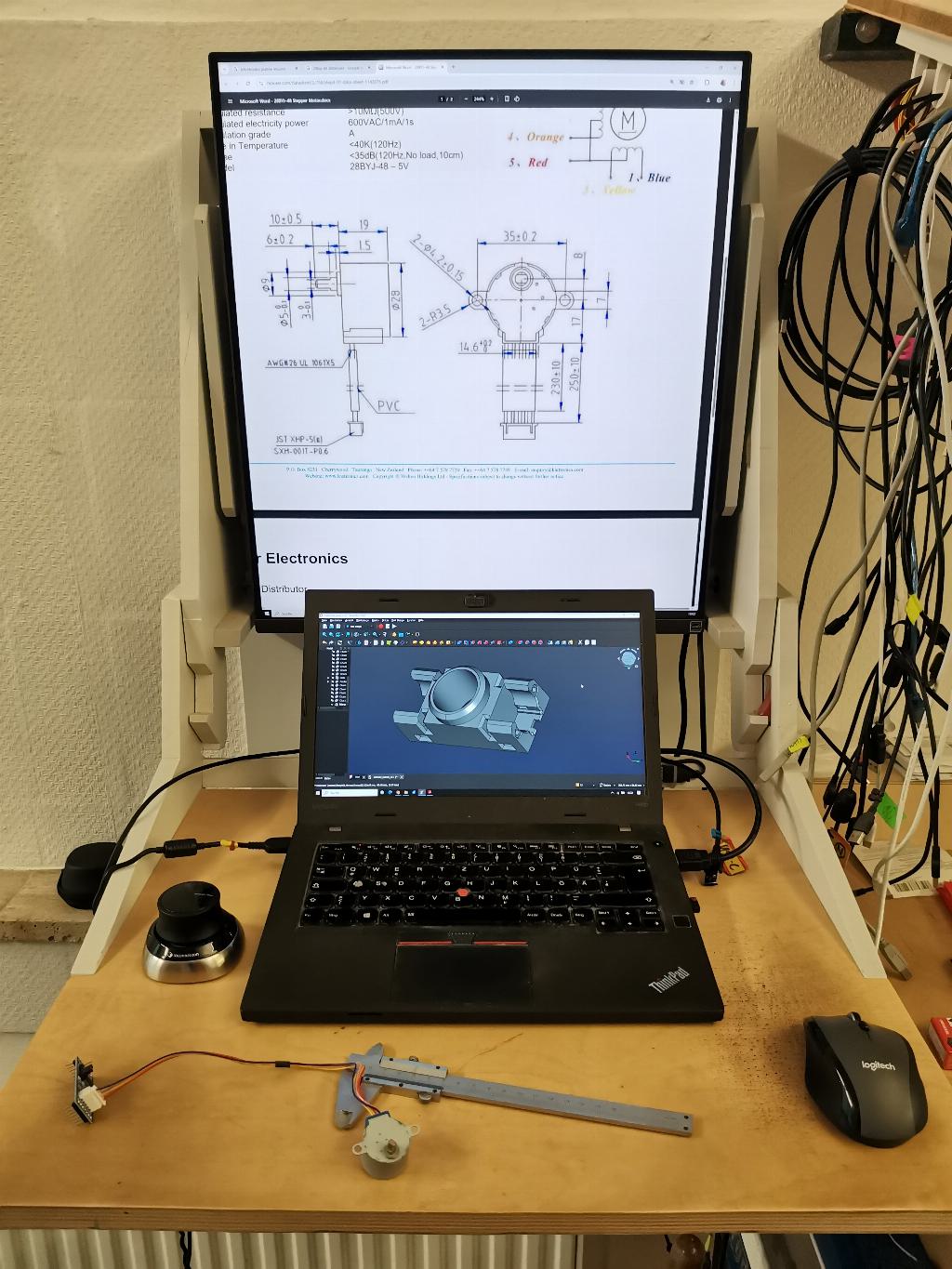

Designing a Tiny Plotter

After that evening, I couldn't stop thinking about the little whiteboard robots. I kept imagining how exciting it would be to control them with real precision, enough to draw predefined vector graphics. My idea was a design with two stepper-motor-driven wheels and a pen at the center between them. A robot with that geometry could rotate on the spot by exact angles and draw lines of accurate length, turning it into a miniature, general-purpose plotter that could draw on surfaces of, in principle, unlimited size.
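To make the geometry concrete, here is a minimal, hardware-independent sketch in plain C of how line lengths and on-the-spot turns translate into stepper steps when the pen sits midway between two wheels. The function names are hypothetical and the constants are assumptions: 2048 steps per revolution is the value commonly quoted for the 28BYJ-48 geared stepper in full-step mode, and the wheel diameter and wheel distance are placeholders you would measure on your own robot.

```c
/* Hypothetical values for illustration only: measure your own robot. */
#define STEPS_PER_REV   2048.0   /* commonly quoted for a 28BYJ-48 in full-step mode */
#define WHEEL_DIAMETER  25.0     /* mm, assumed effective tire diameter */
#define WHEEL_DISTANCE  60.0     /* mm, assumed distance between the tires */
#define PI_CONST        3.14159265358979323846

/* Round to the nearest integer without needing libm. */
static long round_to_long(double x)
{
    return (long)(x >= 0.0 ? x + 0.5 : x - 0.5);
}

/* Steps each wheel must turn to roll a straight line of `mm`
   (negative values mean backward). */
long steps_for_distance(double mm)
{
    double circumference = PI_CONST * WHEEL_DIAMETER;
    return round_to_long(mm * STEPS_PER_REV / circumference);
}

/* Steps each wheel must turn, in opposite directions, to spin the robot
   on the spot by `degrees` around the pen. During such a turn each wheel
   rolls along a circle whose diameter is the wheel distance. */
long steps_for_turn(double degrees)
{
    double arc_mm = PI_CONST * WHEEL_DISTANCE * degrees / 360.0;
    return steps_for_distance(arc_mm);
}
```

For a turn, one wheel is driven the returned number of steps forward and the other the same number backward; since the pen lies on the axis midway between the wheels, it stays in place while the robot rotates.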

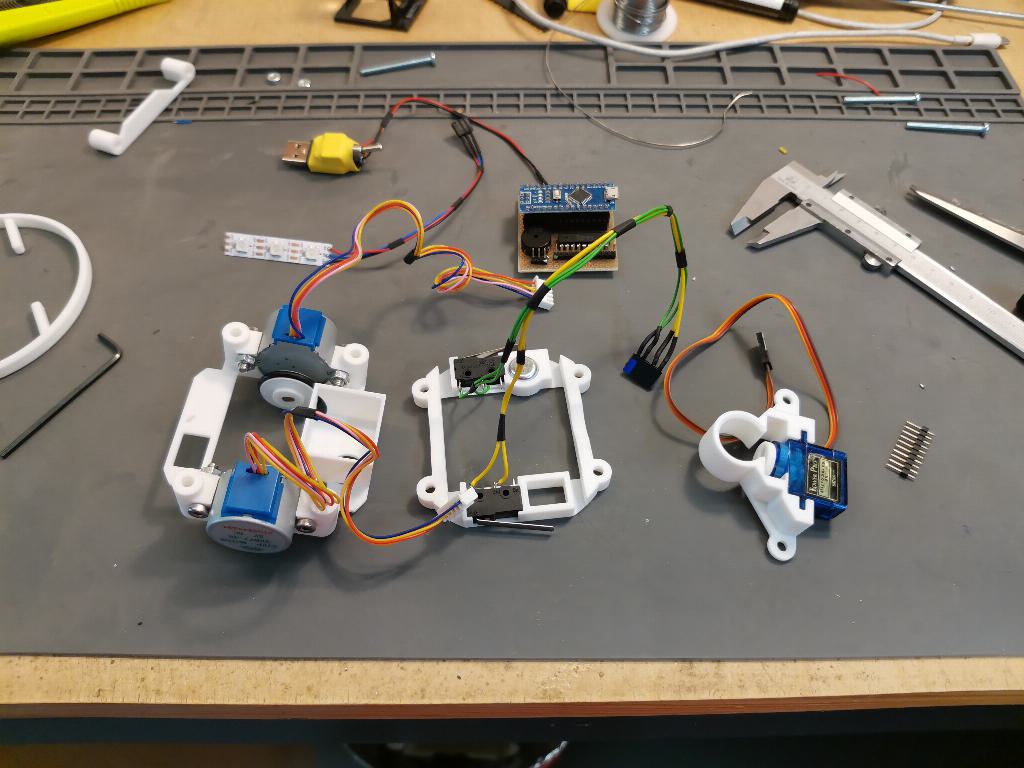

Earlier this year, I wanted to learn FreeCAD, and designing a small, 3D-printable robot chassis seemed like a good exercise. That led to the first tiny plotter prototype: a small device driven by two cheap stepper motors, with O-rings from the plumbing aisle serving as tires. A micro servo lifted the pen, and an Arduino Nano acted as the controller. It was very simple and low-cost, yet it worked well on both the whiteboard and paper.

The only real drawback was its speed. Moving in straight lines was fine, but whenever the robot had to rotate on the spot to change direction, the turn took quite a long time.

To solve this, I redesigned the chassis and rotated the motors by 180° so that the wheels faced inward, toward the pen. The reduced wheel distance decreased the number of wheel revolutions needed to spin the robot, making turns much faster while straight-line movements stayed just as fast.
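Why the wheel distance matters can be seen in a generic calculation (the symbols are introduced here for illustration, not measured values of this robot): with N steps per wheel revolution, effective wheel diameter D, wheel distance W, line length s, and turn angle θ, each wheel needs

$$
n_{\text{line}} = \frac{N\,s}{\pi D}
\qquad\text{and}\qquad
n_{\text{turn}} = \frac{N}{\pi D}\cdot\frac{\pi W\,\theta}{360^\circ} = \frac{N\,W\,\theta}{360^\circ\,D}
$$

steps, because during an on-the-spot turn each wheel rolls along a circle of diameter W. Only n_turn depends on W, so halving the wheel distance halves the steps (and, at a constant step rate, the time) needed for a turn, while straight lines take exactly as long as before.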

But the faster rotation came at a price: the robot became less precise in its angles and, over time, would drift slightly away from its intended heading. With the wheels closer together, the same amount of play at the wheels translates into a larger rotation of the whole robot. This didn't bother me too much, though. I knew from the beginning that the cheap gears in the stepper motors had quite a bit of backlash, and I was pleasantly surprised by how well the robot kept track of its orientation and position in a 2D Cartesian coordinate system, using only trigonometric computations and no external reference.

Keeping It Modular

The second-generation chassis was made from several 3D-printed parts, stacked in layers on four threaded rods. This modular construction allowed me to easily replace layers and test new ideas without having to print the whole robot again.

All versions of the robot have two collision switches mounted behind a ring, 10 cm in diameter, which can slide slightly back and forth and acts as the actual bumper. This way, the robots can sense the whiteboard's outer frame as well as other machines drawing on the same surface, and they can't get tangled up with anything.

In addition to that base setup, I tried different variations for the bottom layer.

One version had a piece of felt on its bottom for cleaning the whiteboard, one of the initial ideas Felix and I had pursued. To clean properly, it needed additional magnets: one on top of the felt to pull the cleaning pad onto the board, and more at the bottoms of the motors to give the tires more grip.

Another version of the robot's bottom layer contained a white LED and a photodiode, "looking" through a pinhole down onto the drawing surface in front of the pen. Now the robot could optically sense brightness contrasts, like lines that had already been drawn. I found this setup very satisfying to explore, as the robot could interact with the drawing itself, which led to very captivating aesthetic results.
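Detecting a line with the LED and photodiode comes down to watching the brightness reading drop. A single threshold tends to fire several times while the sensor sits on the edge of a stroke, so the following C sketch uses two thresholds (hysteresis) and reports each line only once. The type, function name, and threshold values are hypothetical, and it assumes a darker surface gives a lower reading; depending on how the photodiode and its resistor are wired, the comparisons may need to be inverted. On the Nano, the readings could come from analogRead(), which returns values from 0 to 1023.

```c
#include <stdbool.h>

/* Hysteresis line detector. Assumption: ink gives a LOWER reading. */
typedef struct {
    int dark_below;    /* reading below this: the sensor has entered a line */
    int light_above;   /* reading above this: back on blank surface */
    bool on_line;
} LineDetector;

/* Feed one sample; returns true exactly once per line, when it is entered. */
bool line_detector_update(LineDetector *d, int reading)
{
    if (!d->on_line && reading < d->dark_below) {
        d->on_line = true;
        return true;
    }
    if (d->on_line && reading > d->light_above)
        d->on_line = false;
    return false;
}
```

Readings between the two thresholds never change the state, so noise around the edge of a stroke does not produce extra detections.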

From then on, I shifted away from drawing entire pre-programmed vector graphics. Instead, I focused on investigating simple algorithmic behaviors, in which the bumper switches and the optical sensor give the robot sensory feedback and the robot's behavior defines the visual outcome of the drawing. Even without the cleaning bot, the whiteboard remains quite useful, as it lets me try out different algorithms on a large drawing surface without wasting paper.

Behavior, Perception, and Gestalt Psychology

When experimenting with the optical sensor bot, I quickly noticed something interesting. I had given the robot a simple rule: each time it crossed a line, it would toggle the pen, lifting it if it was down and lowering it if it was up, while still following a simple trajectory (e.g., a curve or a straight line). Suddenly, the drawings gained depth: the interrupted stroke was perceived as background, while the crossed lines became the edges of a surface that popped out into the foreground.
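The toggle rule can be tried out without hardware. This C sketch simulates the robot moving along a straight path sampled in 1 mm steps, where `ink[i]` marks positions already covered by earlier lines; every time the sensor enters a line, the pen state flips. For simplicity it treats the sensor as sitting exactly at the pen, although on the robot it looks at the surface slightly in front of it. The function name and data layout are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Walk along n positions and flip the pen each time the sensor enters a line.
   drawn[i] receives the pen state at position i. Returns the final pen state. */
bool toggle_at_crossings(const bool *ink, bool *drawn, size_t n, bool pen_down)
{
    bool was_on_ink = false;
    for (size_t i = 0; i < n; i++) {
        if (ink[i] && !was_on_ink)   /* rising edge: a line was just entered */
            pen_down = !pen_down;    /* penUp() <-> penDown() */
        drawn[i] = pen_down;
        was_on_ink = ink[i];
    }
    return pen_down;
}
```

Along a single pass the result is an interrupted stroke (drawn, gap, drawn), with every change of state sitting exactly on an existing line, so those lines read as the edges of a shape.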

This reminded me of the Gestalt psychologists in Berlin in the early 20th century (the "Berliner Schule der Gestaltpsychologie", or Berlin School of Gestalt psychology, and its "Gestalttheorie"). In 1923, one of the school's founders, Max Wertheimer, described six "factors" of perception, which are mostly known today as the "Gestalt principles". They explain how our brain groups things that are close to each other, how we tend to follow continuous paths and close gaps, and how we separate figure from ground.

[source]

It was fascinating to see these principles suddenly appear in the robot's drawings: simple robot behaviors (repetitions, rule-based reactions to existing lines) led to surprisingly complex visual results, simply because our brain insists on making sense of what it sees. The Gestalt principles not only explain why we perceive depth in the drawings; they also offer plenty of inspiration for algorithms to experiment with.

Logo and Turtles

Developing the code for my robots started with writing some low-level functions in C, such as forward(mm), backward(mm), turn(degrees), penUp(), penDown(), checkSensor(), and checkBumper(). Then I wrote a higher-level program loop where I constructed behaviors using those functions.
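To illustrate how such a higher-level loop can be assembled from the primitives, here is a small C sketch of a "draw straight until you hit something, then bounce off" behavior. The primitive names come from the text, but their parameter and return types are assumptions, and here they are stubs that only update a simulated state (with a pretend bumper hit on every 50th poll) so the behavior can run on a PC. On the robot, behaviorStep() would be called repeatedly from the Arduino sketch's loop() function.

```c
#include <stdbool.h>

/* Simulated state, standing in for the real hardware. */
static float distance_mm  = 0.0f;  /* net distance driven */
static int   turns        = 0;     /* number of turns performed */
static bool  pen_is_down  = false;
static int   bumper_polls = 0;

/* Stubs named after the low-level functions; signatures are assumed. */
void forward(float mm)   { distance_mm += mm; }
void backward(float mm)  { distance_mm -= mm; }
void turn(float degrees) { (void)degrees; turns++; }
void penUp(void)         { pen_is_down = false; }
void penDown(void)       { pen_is_down = true; }

/* Pretend the bumper hits something on every 50th poll. */
bool checkBumper(void)   { return ++bumper_polls % 50 == 0; }

/* One iteration of the behavior: draw straight ahead until the bumper
   fires, then back off with the pen lifted and turn away. */
void behaviorStep(void)
{
    if (checkBumper()) {
        penUp();            /* avoid smearing the line while reversing */
        backward(10.0f);
        turn(135.0f);
        penDown();
    } else {
        forward(1.0f);      /* small increments keep the sensors polled often */
    }
}
```

Moving in small increments and polling the sensors between them is what lets simple rules like this react to the frame, to other robots, or to existing lines.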

Somehow this felt familiar. It reminded me of the educational programming language Logo, which I first read about in a home computer magazine in the early ’80s. Logo featured an on-screen "Turtle". With simple commands like FORWARD, RIGHT, and LEFT, one could move the turtle across the screen, and with PENUP and PENDOWN, control whether it left a trace. Logo made it easy to create spirograph-like vector drawings.

[source]

Reading about Logo became my next rabbit hole. Invented in 1967, it was strongly influenced by Lisp, a professional language central to early AI research and still in use today. While not a direct dialect, Logo carried many of Lisp's core ideas—such as recursion, list processing, and symbolic manipulation—albeit in a simpler, more approachable form for children.

At first, Logo focused on language manipulation. Soon, however, mathematician, psychologist, and MIT AI Laboratory co-founder Seymour Papert joined the Logo development team. He came up with the on-screen turtle graphics described above. He also thought that a tangible robot, which kids could program with the same commands and which would draw on large sheets of paper on the floor, would be ideal for letting them explore mathematical concepts. Those robots were built at the MIT AI Lab.

[source]

At MIT, those robots were called "Turtles", most likely a reference to "Elmer" and "Elsie" (ELectroMEchanical Robot, Light-Sensitive), two autonomous robots that neurobiologist and cybernetician William Grey Walter built in 1948/49. Because they were slow and somewhat turtle-shaped, Walter also called them "tortoises".

It turns out I'm not really contributing anything new to the field. Remember how Felix and I watched the mini robot battle on the whiteboard, producing generative art? In the introduction of his 1971 essay "Teaching Children Thinking", Papert already mentioned the idea of playful "battles between computer-controlled turtles". And as for my robot's photo-sensing ability: Papert described that, too, on the last page of his paper.

[source]

Even though I didn't come up with any revolutionary ideas after all, digging into the history of turtle robots, revisiting Gestalt theory, and watching my robots draw have been a huge pleasure over the past weeks.

Making Your Own Tiny Turtle Plotter

If you're a hacker, maker, or tinkerer, chances are your junk box already holds the parts needed to build a turtle robot over a weekend. I can absolutely recommend it; it's quite rewarding. I won't provide detailed step-by-step instructions for re-creating my robots, but I'll happily share my CAD files, the schematic, the code, and a bill of materials (BOM) for you to explore and build upon.

In case you want to improve the robot, I recommend giving it a better power source, as the little power bank is definitely operating at its limits.

Or, if you want to go crazy, you could even add motion feedback, e.g. via one or more optical mouse sensors. That way, the little device might even become quite a practical tool. Imagine being able to draw precise cutting plans straight from the CAD program onto a large sheet of plywood with a tiny "automatic pen". If you build that thing, please let me know; I might want one in my toolbox!

Files

- FreeCAD files for 3D-printed parts

- Schematics

- Simple Arduino "Hello World" code

- Simple HTML vector editor to create a C array of coordinates

Mechanical hardware

- 3D-printed parts

- 4x 10 cm M4 threaded rod

- 4x 16 mm M4 screws

- 16x M4 nuts

- 2x O-rings, ⌀18.7 mm × 2.6 mm (I used those)

- 1x Ball roller (I ordered the 10 mm high, ⌀12 mm version from here. You might have to get a bit inventive if the right size is sold out.)

- 1x Marker pen with max. ⌀17 mm (e.g. Edding 250, Edding 3000, Staedtler Lumicolor, Stylex Whiteboardmarker, etc.)

Electronics

- 1x Power bank (e.g. one containing an 18650 cell)

- 2x 28BYJ-48 stepper motors with gearbox (make sure to get the 5V version!)

- 1x Micro servo

- 1x Perfboard, 2.54 mm, 17 × 18 holes

- 1x Arduino Nano

- 2x Micro switches with 28mm lever

- 1x White 3 mm LED

- 1x 3 mm photodiode (it is unclear which specific type I had in my junk box)

- 1x WS2812B LED (optional)

- 1x Piezo speaker (optional)

- 1x ULN2803A darlington driver

- 1x 100 Ω resistor (optional, for the white LED)

- 1x 10 kΩ resistor (optional, for the photodiode)

- 2x 220 Ω resistors (for the WS2812B signal)

- 1x Capacitor, 47 µF, 16 V

- 2x Diodes 1N4007

- Male and female pin headers

- 1x USB-A connector

- 1x Power switch
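The 28BYJ-48 is a unipolar motor whose four coils are switched through the ULN2803A. One common way to drive it is the half-step sequence below, which alternates between energizing one coil and two adjacent coils. This C sketch only computes the coil pattern; on the Arduino, each of the four bits would be written to the pin feeding the corresponding ULN2803A input. Which pins and wire order that means depends on the schematic, so the bit-to-coil mapping here is an assumption, as are the type and function names. Half-stepping doubles the number of steps per revolution compared with full steps.

```c
#include <stdint.h>

/* Half-step sequence: bits 0..3 switch coils A..D (mapping assumed).
   Consecutive entries differ in exactly one coil. */
static const uint8_t HALF_STEP[8] = {
    0x1, /* A     */
    0x3, /* A + B */
    0x2, /* B     */
    0x6, /* B + C */
    0x4, /* C     */
    0xC, /* C + D */
    0x8, /* D     */
    0x9  /* D + A */
};

typedef struct {
    uint8_t phase;   /* index into HALF_STEP, 0..7 */
} Stepper;

/* Advance one half-step (dir > 0: forward, otherwise backward) and return
   the coil pattern that should now be output. */
uint8_t stepper_step(Stepper *s, int dir)
{
    s->phase = (uint8_t)((s->phase + (dir > 0 ? 1 : 7)) % 8);
    return HALF_STEP[s->phase];
}
```

If the two motors are mounted as mirror images of each other, driving straight means stepping one motor forward and the other backward, while stepping both in the same direction spins the robot on the spot.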

Conclusion

What began as a playful evening with a friend grew into an exploration of robotics, art, and perception. Along the way, I rediscovered ideas from Gestalt psychology, early AI research, and the educational visions of pioneers like Seymour Papert.

The surprising part is not that these ideas already existed, but how alive they feel when rebuilt today. A simple robot with stepper motors, a pen, and a sensor can still create drawings that are both fascinating and puzzling.

Researching old papers, photos, and film clips has also been a joy. There are plenty of resources online. If you want to dig deeper, here are a few links worth checking out:

- The YouTube channel of Cynthia Solomon, one of the co-inventors of Logo, contains many historic films and videos

- A "History of Turtle Robots"

- Cyberneticzoo about the Logo Turtle

- "The Daily Papert", a website dedicated to Seymour Papert

- Using an MIT turtle robot with an Acorn computer contains some nice old photos

- Turtle graphics in Python

- "Untersuchungen zur Lehre von der Gestalt II" by Max Wertheimer

- This page's videos in HQ

- Project photos in my diary